In this article, we take a detailed look at the use of language models in AI-based threat detection. We'll examine how these models can be used to detect polymorphic code and malicious prompts that bypass EDR (endpoint detection and response) filters. We'll also discuss why these techniques are so effective and what may happen if they aren't used correctly.

Polymorphic Code

Polymorphic code is a type of malware that changes its code, and therefore its appearance and signature, each time it runs or spreads, while its underlying behavior stays the same. This is what lets it avoid detection. Polymorphic code can be detected through machine learning, which is used to identify patterns in data such as text and code. Language models make this kind of detection possible because they learn statistical patterns in text: which words and phrases appear, in what order, and how frequently they occur within the corpus (collection) being analyzed.
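To make the frequency idea concrete, here is a minimal sketch, assuming that code samples can be compared by counting token bigrams (pairs of adjacent tokens) and measuring the cosine similarity between the resulting frequency profiles. The function names `token_ngrams` and `cosine_similarity` and the sample snippets are hypothetical, and simple counting is only a stand-in for what a trained language model learns; it does not describe how any particular product works.

```python
import math
import re
from collections import Counter


def token_ngrams(source: str, n: int = 2) -> Counter:
    """Count token n-grams in a code sample (a crude stand-in for a language model's view of it)."""
    # Tokens: identifiers/keywords, integers, or any single non-space character.
    tokens = re.findall(r"[A-Za-z_]\w*|\d+|\S", source)
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity of two n-gram frequency profiles (0.0 = disjoint, 1.0 = identical)."""
    # A Counter returns 0 for missing keys, so n-grams absent from b contribute nothing.
    dot = sum(count * b[gram] for gram, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


# Hypothetical samples: a "known" snippet, a variant with a renamed variable, and unrelated code.
known = "for item in data: total = total + item"
variant = "for x in data: total = total + x"
unrelated = "print('hello world')"

print(round(cosine_similarity(token_ngrams(known), token_ngrams(variant)), 2))  # 0.67
print(cosine_similarity(token_ngrams(known), token_ngrams(unrelated)))  # 0.0
```

The renamed variant still shares most of its bigrams with the known sample, while unrelated code shares none. Real polymorphic engines also encrypt, reorder, and pad their code, so practical detectors rely on far richer features than raw token counts.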

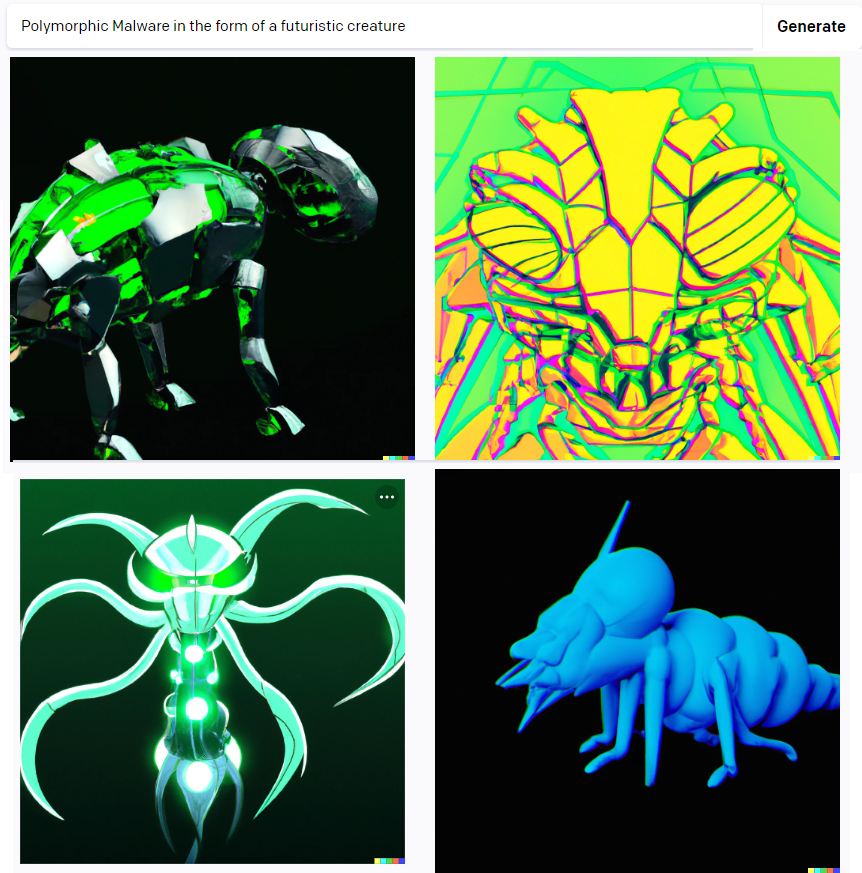

Image Courtesy of DALL-E

The Importance of Language Models in AI Detection

Language is a powerful tool. It's how we interact with each other and the world around us. It can be used to express our thoughts and emotions, or simply to get a point across.

Language models are an essential part of artificial intelligence (AI) detection systems because they help computers understand how humans communicate with one another, and what those interactions mean.

When you're trying to detect malware or other malicious behavior on your computer or network, it's important that your security software understands human language as well as possible, so that it neither flags benign activity as malicious (false positives) nor misses threats altogether (false negatives).

Malicious Prompts that Bypass EDR Filters

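Polymorphic code is a type of malicious software that is capable of changing its appearance. This makes it difficult for traditional, signature-based antivirus products to detect and block, because each new variant no longer matches the signature recorded for earlier ones.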
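The following sketch, assuming a "signature" is simply a SHA-256 hash of the exact source text, shows why byte-level signatures break so easily, and how normalizing identifiers with Python's standard `ast` module yields a slightly sturdier fingerprint. The helper names and the snippets are hypothetical and chosen for illustration only.

```python
import ast
import hashlib


def byte_signature(source: str) -> str:
    """A naive 'signature': the SHA-256 hash of the exact source bytes."""
    return hashlib.sha256(source.encode("utf-8")).hexdigest()


class _RenameIdentifiers(ast.NodeTransformer):
    """Replace every variable and argument name with a placeholder so renaming no longer matters."""

    def visit_Name(self, node: ast.Name) -> ast.Name:
        return ast.copy_location(ast.Name(id="_", ctx=node.ctx), node)

    def visit_arg(self, node: ast.arg) -> ast.arg:
        node.arg = "_"
        return node


def normalized_signature(source: str) -> str:
    """Hash the syntax tree after identifier normalization (hypothetical sturdier fingerprint)."""
    tree = _RenameIdentifiers().visit(ast.parse(source))
    return hashlib.sha256(ast.dump(tree).encode("utf-8")).hexdigest()


# Two functionally identical snippets that differ only in variable names.
original = "total = 0\nfor item in data:\n    total += item\n"
variant = "acc = 0\nfor x in data:\n    acc += x\n"

print(byte_signature(original) == byte_signature(variant))  # False
print(normalized_signature(original) == normalized_signature(variant))  # True
```

Renaming variables is the most trivial form of mutation; real polymorphic malware also encrypts its body or inserts junk instructions, which this normalization does not handle.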

The OpenAI API, an otherwise harmless service, was recently used in a polymorphic code proof of concept to bypass EDR filters. The proof of concept sent a prompt through the OpenAI API client to generate Python code (a second layer) at runtime, which was then executed without any user interaction other than clicking "OK". Its author, Jeff Sims, Principal Security Engineer at HYAS InfoSec (@1337_Revolution), whose excellent research is featured on Dark Reading, says: “I created a simple proof of concept (PoC) exploiting a large language model to synthesize polymorphic keylogger functionality on-the-fly, dynamically modifying the benign code at runtime — all without any command-and control infrastructure to deliver or verify the malicious keylogger functionality”.

https://www.darkreading.com/endpoint/ai-blackmamba-keylogging-edr-security

And you can find his whitepaper here!
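As a defensive illustration of the pattern described above (a program that obtains generated code over an API and executes it at runtime), here is a minimal, hypothetical static heuristic: flag a Python script if it both imports a network or LLM client library and calls `exec`, `eval`, or `compile`. The module watch-list and the function name are assumptions, and this is not a description of any particular EDR product; because the generated code only exists in memory, real defenses lean heavily on runtime behavioral monitoring.

```python
import ast

# Hypothetical watch-lists; a real detector would use far richer signals.
NETWORK_MODULES = {"openai", "requests", "urllib", "http", "socket"}
DYNAMIC_EXEC_CALLS = {"exec", "eval", "compile"}


def flags_runtime_code_generation(source: str) -> bool:
    """Return True if a script imports a network/LLM client AND calls exec/eval/compile.

    Naive static check: the source is only parsed, never executed, and obfuscated
    imports, an aliased exec, or encoded payloads will slip past it.
    """
    tree = ast.parse(source)
    imports_network = False
    calls_dynamic_exec = False
    for node in ast.walk(tree):
        if isinstance(node, ast.Import):
            imports_network |= any(alias.name.split(".")[0] in NETWORK_MODULES for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.module:
            # node.module is None for relative imports such as "from . import x".
            imports_network |= node.module.split(".")[0] in NETWORK_MODULES
        elif isinstance(node, ast.Call) and isinstance(node.func, ast.Name):
            calls_dynamic_exec |= node.func.id in DYNAMIC_EXEC_CALLS
    return imports_network and calls_dynamic_exec


suspicious = "import openai\nsnippet = get_code()\nexec(snippet)\n"
benign = "import requests\nprint(requests.get('https://example.com').status_code)\n"

print(flags_runtime_code_generation(suspicious))  # True
print(flags_runtime_code_generation(benign))  # False
```

Obfuscated imports (for example via `importlib`), an aliased `exec`, or an encoded payload would all evade it, which is part of why the approach described in the quote is hard to catch with static rules alone.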

Language models are likely to become one of the most powerful tools for detecting advanced persistent threats (APTs).

Language models are a core technique in natural language processing (NLP). They can be used to detect malicious prompts by comparing them with known good prompts. Language models also power ChatGPT and are used in other systems such as DeepHack and A2I2.
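A minimal sketch of the "compare with known good prompts" idea, assuming word-overlap (Jaccard) similarity as a stand-in for the embedding similarity a real language model would provide. The known-good list, the 0.3 threshold, and the function names are all hypothetical.

```python
import re


def word_set(text: str) -> set:
    """Lower-case word tokens of a prompt."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def jaccard(a: set, b: set) -> float:
    """Jaccard similarity: size of the intersection divided by size of the union."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def is_anomalous(prompt: str, known_good: list, threshold: float = 0.3) -> bool:
    """Flag a prompt whose best match among the known-good prompts falls below the threshold."""
    words = word_set(prompt)
    best = max((jaccard(words, word_set(good)) for good in known_good), default=0.0)
    return best < threshold


# Hypothetical baseline of prompts this application normally receives.
known_good = [
    "summarize this quarterly sales report",
    "translate this email into french",
    "write a unit test for this function",
]

print(is_anomalous("summarize this sales report", known_good))  # False
print(is_anomalous("write python code that records every keystroke and hides from antivirus", known_good))  # True
```

A production system would typically compare language-model embeddings rather than raw word sets, and would tune the threshold on labeled data instead of picking it by hand.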

Conclusion

The use of language models in AI detection is a powerful tool for detecting advanced persistent threats. Polymorphic code has been around for decades, but it's now being used in new and innovative ways that make it even harder to detect. Language models can help us identify these threats before they cause damage by detecting malicious prompts that bypass EDR filters or other security measures.